By

anders pearson

24 Feb 2019

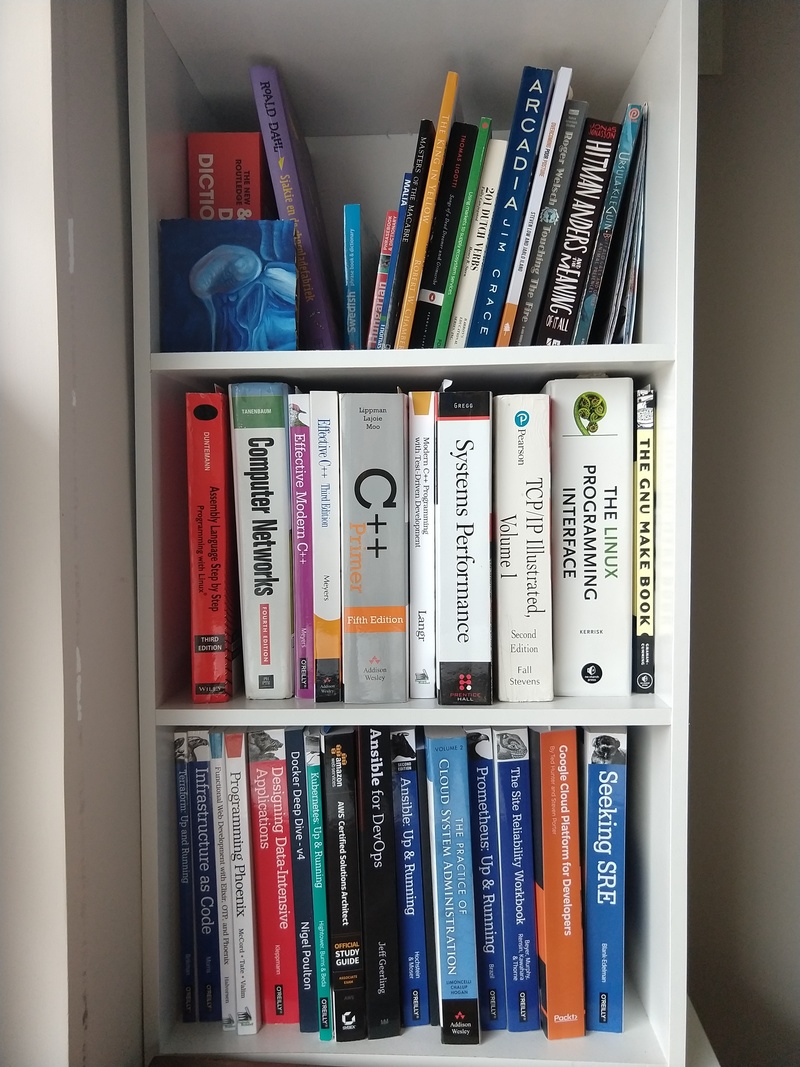

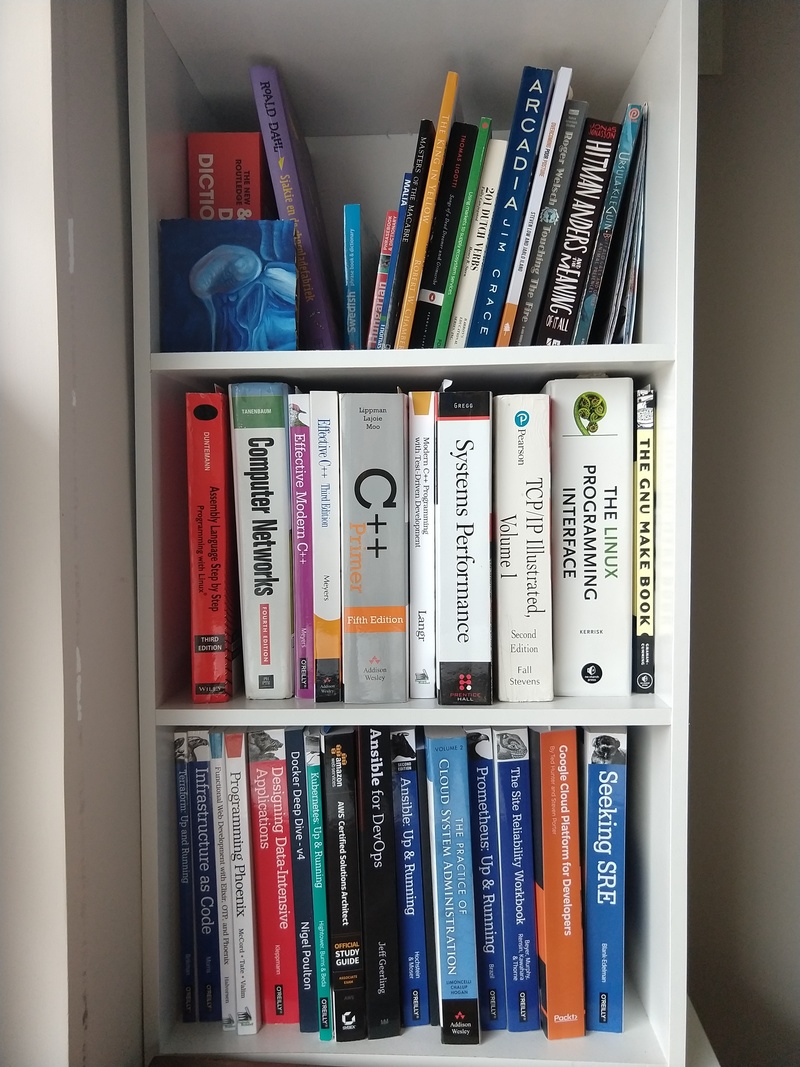

The #shelfie hashtag has been coming up lately. I’m bad at social media, but I thought I’d post mine here.

I moved from the US to Europe a few years ago and I had to massively cut down my book collection. In the last few years, I’ve started accumulating books again, but I’ve been a bit more purposeful this time around. So most of the books on my shelves are ones that I decided were important enough to bring across the ocean with me or that I’ve wanted to have for reference since the move. Fiction and “lighter” non-fiction that I’m only going to read through once I try to buy in electronic formats so it doesn’t take up precious space in my flat.

My books are vaguely organized though not totally consistently. The top shelf here is the really random stuff, including the few bits of fiction I have in physical form, Dutch language books (that’s “Charlie and the Chocolate Factory” in Dutch), and of course, one of my small paintings in the front.

The middle shelf is sort of C++, Linux, Networking, and low level systems stuff.

Bottom is mostly Cloud, DevOps, and SRE related.

These shelves are right below a window so I had a hard time getting a clearer photo without it washing out a bit.

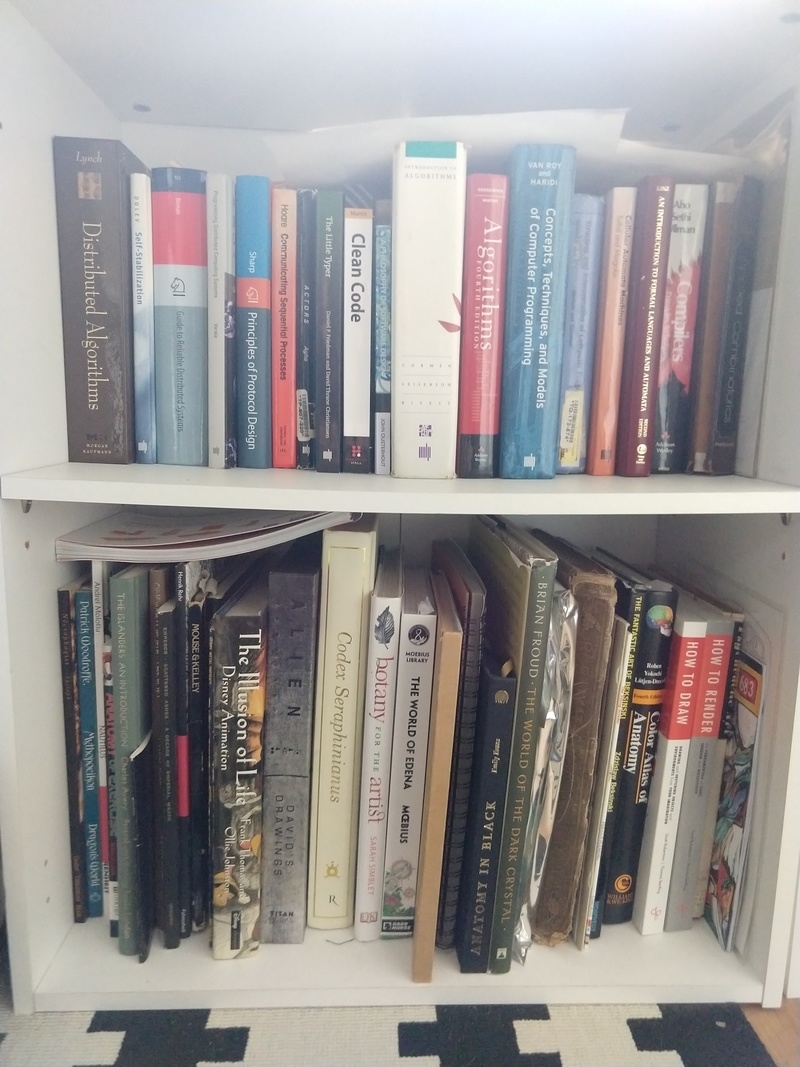

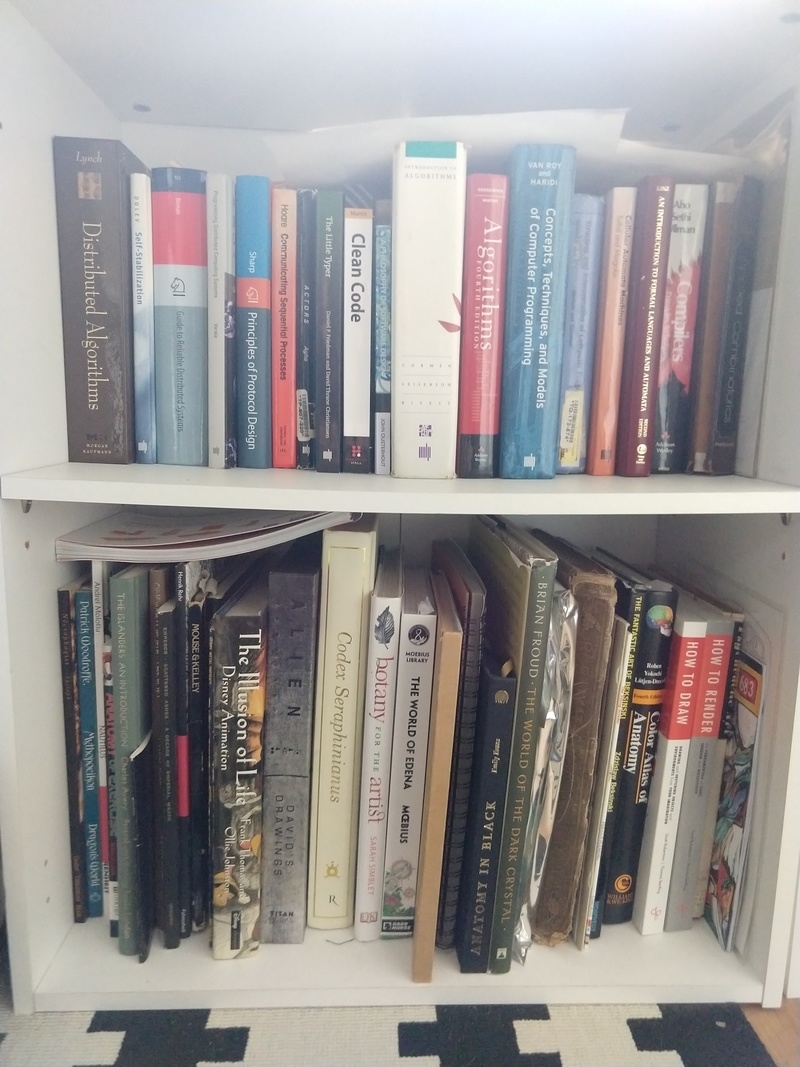

Top shelf here is focused more on CS and distributed systems. Some classics here like SICP, CLSR, the Dragon Book, CTM, etc.

Bottom shelf is the fun one with my weird art books. The foil wrapped one is the NASA Graphics Standards Manual. The old looking one next to it is an 1800’s edition of Elihu Vedder’s illustrated version of the Rubaiyat of Omar Khayyam.

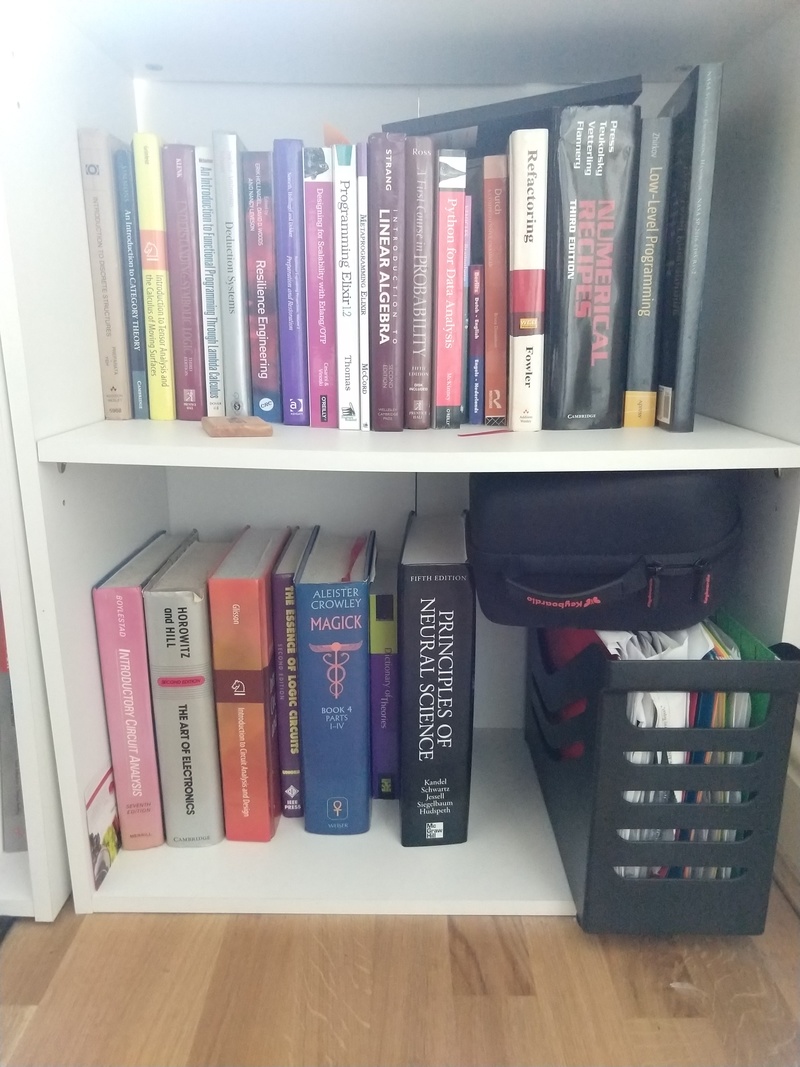

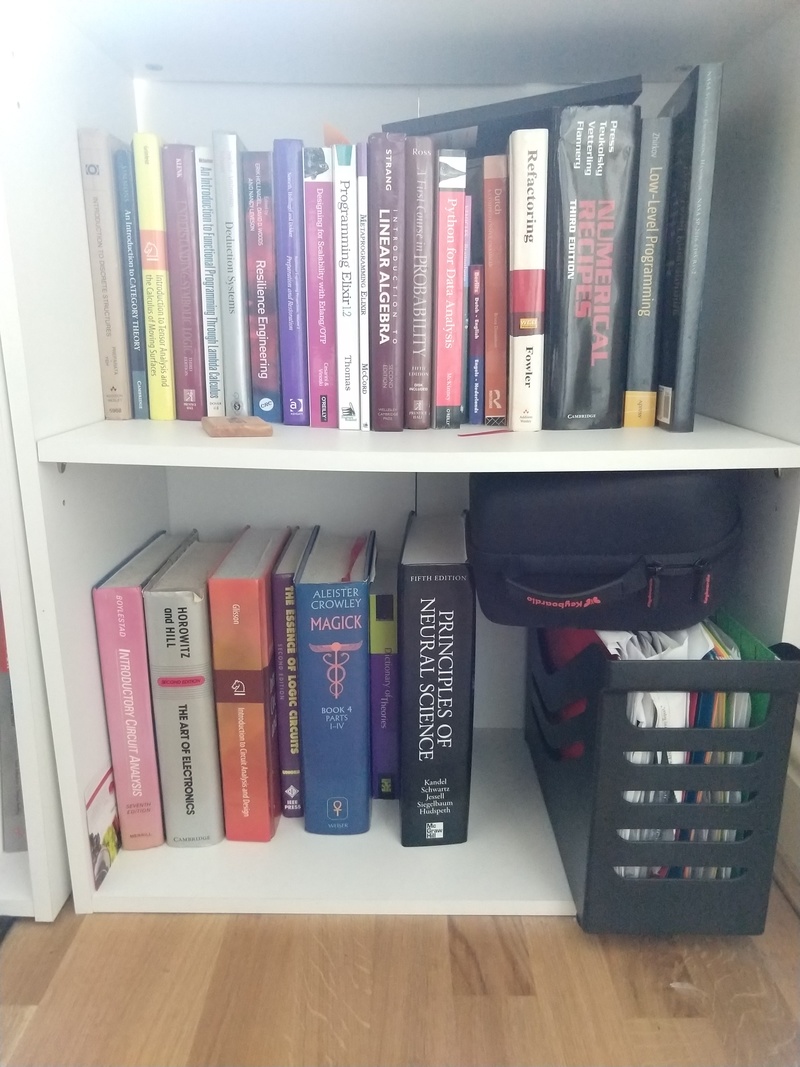

Top here is math and random programming books that didn’t fit on the other shelves.

The bottom shelf has a few electronics books and random large ones that won’t fit elsewhere. The filing box is mostly full of printed out CS papers.

By

anders pearson

14 Jan 2019

It took me a little longer than I’d planned, but here is my yearly music round up for 2018 just as I’ve done for 2017, 2016, and 2015.

As usual, this isn’t exhaustive and only includes bandcamp links. I have no other commentary other than that I enjoyed these. My tastes run towards weird, dark, loud, and atmospheric. Enjoy.

By

anders pearson

02 Jan 2018

I posted my yearly music roundup yesterday, which I’ve done for

the last three years. Today I thought I’d just take a moment

to explain how I go about creating those posts. Eg, there are 165

albums in the last post and I link both the artist and album pages on

each. Do you really think I manually typed out the code for 330 links?

Hell no! I’m a programmer, I automate stuff like that.

First of all, I find music via a ton of different sources. I follow

people on Twitter, I subscribe to various blogs’ RSS feeds, and I hang

out in a bunch of music related forums online. So I’m constantly

having new music show up. I usually end up opening them in a new tab

until I get a chance to actually listen to them. Once I’ve listened to

an album and decided to save it to my list, my automation process

begins.

I’m a longtime emacs user, so I have a capture template set up for

emacs org-mode. When I want to save a music link, I copy the URL in

the browser, then hit one keyboard shortcut in emacs (I always have

an emacs instance running), I paste the link there and type the name

of the artist. That appends it to a list in a text file. The whole

process takes a few seconds. Not a big deal.

At the end of the year, I have this text file full of links. The first

few lines of this last year’s looks something like this:

** Woe - https://woeunholy.bandcamp.com/album/hope-attrition

** Nidingr - https://nidingrsom.bandcamp.com/album/the-high-heat-licks-against-heaven

** Mesarthim - https://mesarthim.bandcamp.com/album/type-iii-e-p

** Hawkbill - https://hawkbill.bandcamp.com/track/fever

** Black Anvil - https://blackanvil.bandcamp.com/album/as-was

** Without - https://withoutdoom.bandcamp.com/

** Wiegedood - https://wiegedood.bandcamp.com/releases

For the first two years, I took a fairly crude approach and just record

an ad-hoc emacs macro that would transform, eg, the first line into

some markdown like:

* [Woe](https://woeunholy.bandcamp.com/album/hope-attrition)

Which the blog engine eventually renders as:

<li><a href="https://woeunholy.bandcamp.com/album/hope-attrition">Woe</a></li>

A little text manipulation like that’s a really basic thing to do in

emacs. Once the macro is recorded, I can just hit one key over and

over to repeat it for every line.

Having done this for three years now though, I’ve noticed a few

problems, and wanted to do a little more as well.

First, You’ll notice that the newest post links both the artist and

the album. This, despite the fact that I only captured the album link

originally.

Second, if you look closely, you’ll notice that not all of the bandcamp links are quite the

same format. Most of them are

<artist>.bandcamp.com/album/<album-name>, but there are a few anomalies like

https://hawkbill.bandcamp.com/track/fever or

https://withoutdoom.bandcamp.com/ or

https://wiegedood.bandcamp.com/releases. The first of those was a

link to a specific track on an album, the latter two both link to the

“artist page”, but if an artist on bandcamp only has one album, that

page displays the data for that album. Unfortunately, that’s a bad

link to use. If the artist adds another album later, it changes. Some

of the links on my old posts were like those and now just point at the

generic artist page.

So, going from just the original link that I’d saved off, whatever

type it happened to be, I wanted to be able to get the artist name,

album name, and a proper, longterm link for each.

I’ve written some emacs lisp over the years and I have no doubt that

if I really wanted to, I could do it all in emacs. But writing a

web-scraper in emacs is a little masochistic, even for me.

The patth of least resisttance for me probably would’ve been to do it

in Python. Python has a lot of handy libraries for that kind of thing

and it would’ve have taken very long.

I’ve been on a Go kick lately though, and I ran across

colly, which looked like a pretty

solid scraping framework for Go, so I decided to implement it with

that.

First, using colly, I wrote a very basic

scraper for bandcamp

to give me a nice layer of abstraction. Then I threw together a real

simple program using it to go through my list of links, scrape the

data for each, and generate the markdown syntax:

script

src=”https://gist.github.com/thraxil/8d858eddd187d8a7a9edb91057d7ee72.js”</script>

I just run that like:

go run yearly.go | sort --ignore-case > output.txt

And a minute or two later, I end up with a nice sorted list that I can

paste into my blog software and I’m done.

By

anders pearson

01 Jan 2018

For the third year in a row, here is my roundup of music released in 2017 that I enjoyed.

One of the reasons that I’ve been making these lists is to counteract a sentiment that I encounter a lot, especially with people my age or older. I often hear people say something to the effect of “The music nowadays just isn’t as good as [insert time period when they were in their teens and twenties]”. Sometimes this also comes with arguments about how the internet/filesharing/etc. have killed creativity because artists can’t make money anymore so all that’s left is the corporate friendly mainstream stuff. I’m not going to get into the argument about filesharing and whether musicians are better or worse off than in the past (hot take: musicians have always been screwed over by the music industry, the details of exactly how are the only thing that technology is changing). But I think the general feeling that music now isn’t like the “good old days” is bullshit and the result of mental laziness and stagnation. We naturally fall into habits of just listening to the music that we know we like instead of going out looking for new stuff and exploring with an open mind. My tastes run towards weird dark heavy metal, so that’s what you’ll see here, but I guarantee that for whatever other genres you are into, if you put the effort into looking just a little off the beaten path, you could find just as much great new music coming out every year. I certainly love many of the albums and bands of my youth, but I also feel like the sixteen year old me would be really into any one of these as well.

OK, I know I’ve said that I don’t do “top 10” lists or anything like that, but if you’ve made it all the way to the bottom of this post, I do want to highlight a few that were particularly notable: Bell Witch, Boris, Chelsea Wolfe, Goatwhore, Godflesh, King Woman, Lingua Ignota, Myrkyr, Pallbearer, Portal, The Bug vs Earth, Woe, and Wolves in the Throne Room.

Plus special mention to Tyrannosorceress for having my favorite band name of the year.

By

anders pearson

21 Dec 2017

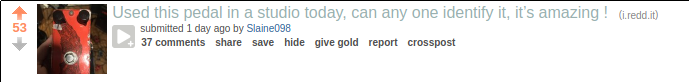

Last night, I was scanning the /r/guitarpedals subreddit. Something I have been known to do… occasionally.

I see this post:

OK, someone’s trying to identify a pedal they came across in a studio. I’m not really an expert on boutique pedals, but I have spent a little time on guitar forums over the years so who knows, maybe I can help?

Clicking the link, there’s a better shot of the pedal:

Hmm… nope, don’t recognize it. Close the tab…

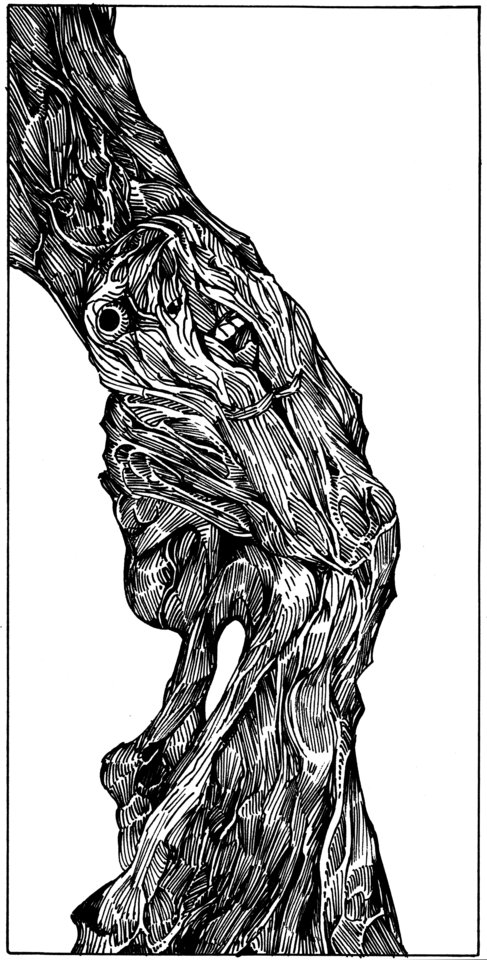

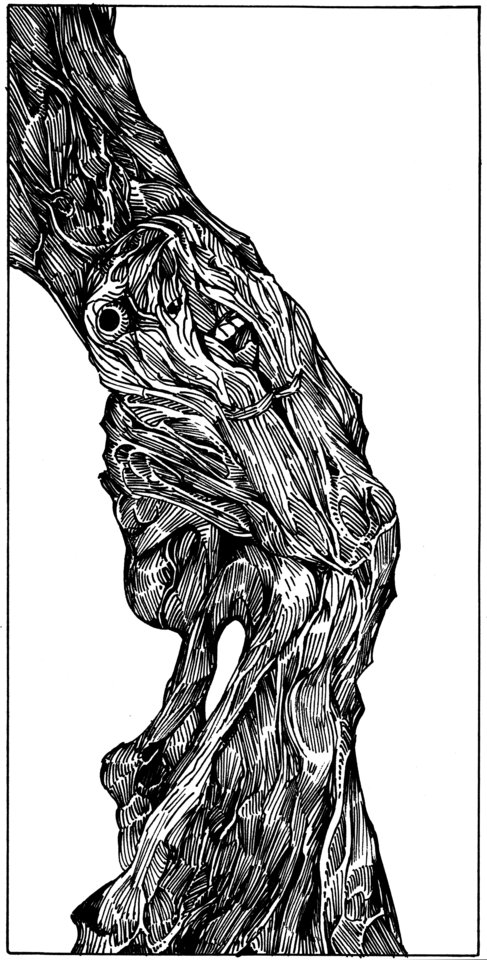

OK, yeah, the pedal doesn’t look familiar, but the artwork on it sure does…

That’s one of my drawings from about 2008.

So at some point, someone out there built a custom guitar pedal, used one of my drawings for it, it ended up in a recording studio somewhere, someone else found the pedal in the studio, took a picture, posted it on reddit, and I stumbled on it.

Anyone who’s known me for very long knows that I post all of my artwork online under a Creative Commons Public Domain license. I’m not a career artist and it’s not worth the hassle for me to try to restrict access on my stuff and I’d rather just let anyone use it for whatever they want. So this obviously makes me very happy.

I’ve had plenty of people contacting me over the years asking to use them. That’s unnecessary but appreciated. My paintings and drawings have appeared on dozens of websites and articles. There are a couple books out there that include them (besides the Abstract Comics Anthology that I was actively involved in). I know that there’s at least one obscure death metal album out there that uses one of my paintings for the cover. I’ve had a few people say they were going to get tattoos, but I’ve never seen a photo of the results, so I can’t say for certain whether anyone followed through on that.

This is the first time that I’ve run into my own work like this randomly in a place that I wasn’t looking.

BTW, no one has yet identified the pedal, so obviously, if you know anything about who built it, let me know.

By

anders pearson

25 Nov 2017

Recently, Github added the ability to archive repositories. That prompted me to dig up some code that I wrote long ago. Stuff that really shouldn’t be used anymore but that I’m still proud of. In general, this got me reminiscing about old projects and I thought I’d take a moment to talk about a couple of them here, which are now archived on Github. Both are web frameworks that I wrote in Python and represent some key points in my personal programming history.

I started writing Python in about 2003, after spending the previous

five years or so working mostly in Perl. Perl was an important

language for me and served me well, but around that point in time, it

felt like every interesting new project I saw was written in Python. I

started using Python on some non web-based applications and

immediately liked it.

Back then, many smaller dynamic sites on the web were still using

CGI. Big sites used Java but it was heavy-weight and slow to develop

in. MS shops used ASP. PHP was starting to gain some popularity and

there were a ton of other options like Cold Fusion that had their own

niches. Perl CGI scripts running on shared Linux hosts were still

super popular. With Perl, if you needed a little more performance than

CGI scripts offered, if you ran your own Apache instance, you could

install mod_perl and see a pretty nice boost (along with some other

benefits). Once you outgrew simple guestbooks and form submission CGI

scripts, you needed a bit more structure to your app. Perl had a

number of templating libraries, rudimentary ORMs, and routing

libraries. Everyone generally picked their favorites and put together

a basic framework. Personally, I used

CGI::Application,

Class::DBI,

and

HTML::Template. It

was nowhere near as nice as frameworks that would come later like Ruby

on Rails or Django, but at the time, it felt pretty slick. I could

develop quickly and keep the code pretty well structured with cleanly

separated models, views, and templates.

Python wasn’t really big in the web world yet. There was Zope, which

had actually been around for quite a while and had proven itself to be

quite capable. Zope was… different though. Like, alien technology

different. It included an object database and basically took a

completely different approach to solving pretty much every

problem. Later, I would spend quite a bit of time with Zope and Plone,

and I have a great deal of respect for it, but as a newcomer to

Python, it was about ten steps too far.

So, in the meantime, I did the really predictable programmer thing and

just ported the stuff I missed from Perl to Python so I could write

applications the same way, but in a language that I had grown to

prefer.

Someone else had already made a port of HTML::Template,

htmltmpl. It worked, but I did

have to make some fixes to get it working the way I liked.

Similarly, Ian Bicking had written

SQLObject, which was a very capable ORM

for the time.

My major undertaking was

cgi_app, which was a port of

CGI::Application, routing HTTP requests to methods on a Python object,

handling the details of rendering templates, and providing a

reasonable interface to HTTP parameters and headers.

It looks pretty basic compared to anything modern, and there are some

clear PEP8 violations, but I remember it actually being pretty

straightforward and productive to work with. I could write something

like

#!/usr/bin/python

from cgi_app import CGI_Application

class Example_App(CGI_Application):

def setup(self):

self.start_mode = 'welcome'

def welcome(self):

return self.template("welcome.tmpl",{})

def param_example_form(self):

return self.template("param_example_form.tmpl",{})

def param_example(self):

name = self.param('name')

return self.template("param_example.tmpl",{'name' : name})

if __name__ == "__main__":

e = Example_App()

e.run()

drop that file in a cgi-app directory along with some templates and

have a working app. It was good enough that I could use it for work

and I built more than a few sites with it (including at least one

version of this site).

I quickly added support for other template libraries and

mod_python, the Python equivalent to

mod_perl.

I don’t remember ever really publicising it beyond an

announcement here

and I didn’t think anyone else was really using it. Shockingly

recently though, I got an email offering me some consulting work for a

company that had built their product on it, were still using it, and

wanted to make some changes. That was a weird combination of pride and

horror…

By around 2006 or 2007, the landscape had changed dramatically.

Ruby on Rails 1.0 was released in 2005

and turned everything on its head. Other Python developers like me had

their own little frameworks and were writing code but there wasn’t

really any consensus. Django,

TurboGears, web.py, and

others all appeared and had their fans, but none were immediately

superior to the others and developer resources and mindshare were

split between them. In the meantime, RoR was getting all the press and

developer attention. It was a period of frustration for the Python

community.

Out of that confusion and frustration came something pretty neat

though. A few years earlier, PJE had come up with

WSGI,

the Web Server Gateway Interface, but it hadn’t really taken off. WSGI

was a very simple standard for allowing different web servers and

applications to all communicate through a common interface. At some

point the Python community figured out that standardizing on WSGI

would allow for better integration, more portability, simpler

deployment, and allowed for chaining “middleware” components together

in clever ways. Most of the competing frameworks quickly added WSGI

support. Django took a little longer but eventually came around. The

idea was so good that it eventually was adopted by other languages

(eg, Rack for Ruby).

JSON had mostly pushed out XML for data serialization and web

developers were beginning to appreciate

RESTful architecture. I

began thinking about my own applications in terms of small, extremely

focused REST components that could be stitched together into a full

application. I called this approach “microapps” and was fairly

evangelical about it,

even buying ‘microapps.org’ and setting it up as a site full of

resources (I eventually let that domain lapse and it was bought by

spammers so don’t try going there now). Other smart folks like Ian

Bicking were

thinking in similar ways

it seemed like we were really onto something. Maybe we were. I don’t

really want to take any credit for the whole “microservices” thing

that’s popular now, but I do feel like I see echoes of the same

conversations happening again (and we were just rehashing

SOA and

its predecessors; everything comes back around).

Eventually, after writing quite a few of these (mostly in TurboGears),

almost always backed by a fairly simple model in a relational

database, I began to tire of implementing the same sort of glue logic,

mapping HTTP verbs to basic CRUD operations in the database. So I

wrote a little framework. This was

Bourbon (WSGI can be pronounced

“whiskey”).

Bourbon let me essentially define a single database table (using

SQLALchemy, which was the new cool Python ORM) and expose a REST+JSON

endpoint for it (GET to SELECT, POST or PUT to INSERT, PUT

to UPDATE, and DELETE to DELETE) with absolutely minimal

boilerplate. There were hooks where you could add additional

functionality, but you got all of that pretty much out of the box by

defining your schema and URL patterns.

For a number of reasons, I mostly switched to Django shortly after

that, so I never went too far with it, but it was simple and clear and

super useful for prototyping.

In recent years, I’ve built a few apps using

Django Rest Framework and it

has a similar feel (just way more complete).

I don’t have a real point here. I just felt like reminiscing about

some old code. I’ve managed to resist writing a web framework for the

last decade, so at least I’m improving.

By

anders pearson

30 Sep 2017

A little behind the curve on this one (hey, I’ve been busy), but as of today, every Python app that I run is now on Python3. I’m sure there’s still Python2 code running here and there which I will replace when I notice it, but basically everything is upgraded now.

By

anders pearson

24 Sep 2017

One of my favorite bits of the Go standard library is expvar. If you’re writing services in Go you are probably already familiar with it. If not, you should fix that.

Expvar just makes it dead simple to expose variables in your program via an easily scraped HTTP and JSON endpoint. By default, it exposes some basic info about the runtime memory usage (allocations, frees, GC stats, etc) but also allows you to easily expose anything else you like in a standardized way.

This makes it easy to pull that data into a system like Prometheus

(via the expvarCollector)

or watch it in realtime with expvarmon.

I still use Graphite for a lot of my metrics collection and monitoring though and noticed a lack of simple tools for geting expvar metrics into Graphite. Peter Bourgon has a Get to Graphite library which is pretty nice but it requires that you add the code to your

applications, which isn’t always ideal.

I just wanted a simple service that would poll a number of expvar endpoints and submit the results to Graphite.

So I made samlare to do just that.

You just run:

$ samlare -config=/path/to/config.toml

With a straightforward TOML config file that would look something like:

CarbonHost = "graphite.example.com"

CarbonPort = 2003

CheckInterval = 60000

Timeout = 3000

[[endpoint]]

URL = "http://localhost:14001/debug/vars"

Prefix = "apps.app1"

[[endpoint]]

URL = "http://localhost:14002/debug/vars"

Prefix = "apps.app2"

FailureMetric = "apps.app2.failure"

That just tells samlare where your carbon server (the part of Graphite that accepts metrics) lives, how often to poll your endpoints (in ms), and how long to wait on them before timing out (in ms, again). Then you specify as many endpoints as you want. Each is just the URL to hit and what prefix to give the scraped metrics in Graphite. If you specify a FailureMetric, samlare will submit a 1 for that metric if polling that endpoint fails or times out.

There are more options as well for renaming metrics, ignoring metrics, etc, that are described in the README, but that’s the gist of it.

Anyone else who is using Graphite and expvar has probably cobbled something similar together for their purposes, but this has been working quite well for me, so I thought I’d share.

As a bonus, I also have a package, django-expvar that makes

it easy to expose an expvar compatible endpoint in a Django app (which samlare will happily poll).

By

anders pearson

01 Jan 2017

Once

again,

I can’t be bothered to list my top albums of the year, but here’s a

massive list of all the music that I liked this year (that I could

find on Bandcamp). No attempt at ranking, no commentary, just a

firehose of weird, dark music that I think is worth checking out.

By

anders pearson

03 Mar 2016

I would like to share how I use a venerable old technology, GNU Make, to manage the common tasks associated with a modern Django project.

As you’ll see, I actually use Make for much more than just Django and that’s one of the big advantages that keeps pulling me back to it. Having the same tools available on Go, node.js, Erlang, or C projects is handy for someone like me who frequently switches between them. My examples here will be Django related, but shouldn’t be hard to adapt to other kinds of codebases. I’m going to assume basic unix commandline familiarity, but nothing too fancy.

Make has kind of a reputation for being complex and obscure. In fairness, it certainly can be. If you start down the rabbit hole of writing complex Makefiles, there really doesn’t seem to be a bottom. Its reputation isn’t helped by its association with autoconf, which does deserve its reputation for complexity, but we’ll just avoid going there.

But Make can be simple and incredibly useful. There are plenty of tutorials online and I don’t want to repeat them here. To understand nearly everything I do with Make, this sample stanza is pretty much all you need:

ruleA: ruleB ruleC

command1

command2

A Makefile mainly consists of a set of rules, each of which has a list of zero or more prerequisites, and then zero or more commands. At a very high level, when a rule is invoked, Make first invokes any prerequisite rules (if needed), which may have their own stanzas defined elsewhere) and then runs the designated commands. There’s some nonsense having to do with requiring actual TAB characters in there instead of spaces for indentation and more syntax for comments (lines starting with #) and defining and using variables, but that’s the gist of it right there.

So you can put something extremely simple (and pointless) like

say_hello:

echo make says hello

in a file called Makefile, then go into the same directory and do:

$ make say_hello

echo make says hello

make says hello

Not exciting, but if you have some complex shell commands that you have to run on a regular basis, it can be convenient to just package them up in a Makefile so you don’t have to remember them or type them out every time.

Things get interesting when you add in the fact that rules are also interpreted as filenames and Make is smart about looking at timestamps and keeping track of dependencies to avoid doing more work than necessary. I won’t give trivial examples to explain that (again, there are other tutorials out there), but the general interpretation to keep in mind is that the first stanza above specifies how to generate or update a file called ruleA. Specifically, other files, ruleB and ruleC need to have first been generated (or are already up to date), then command1 and command2 are run. If ruleA has been updated more recently than ruleB and ruleC, we know it’s up to date and nothing needs to be done. On the other hand, if the ruleB or ruleC files have newer timestamps than ruleA, ruleA needs to be regenerated. There will be meatier examples later, which I think will clarify this.

The original use-case for Make was handling complex dependencies in languages with compilers and linkers. You wanted to avoid re-compiling the entire project when only one source file changed. (If you’ve worked with a large C++ project, you probably understand why that would suck.) Make (with a well-written Makefile) is very good at avoiding unnecessary recompilation while you’re developing.

So back to Django, which is written in Python, which is not a compiled language and shouldn’t suffer from problems related to unnecessary and slow recompilation. Why would Make be helpful here?

While Python is not compiled, a real world Django project has enough complexity involved in day to day tasks that Make turns out to be incredibly useful.

A Django project requires that you’ve installed at least the Django python library. If you’re actually going to access a database, you’ll also need a database driver library like psycopg2. Then Django has a whole ecosystem of pluggable applications that you can use for common functionality, each of which often require a few other miscellaneous Python libraries. On many projects that I work on, the list of python libraries that get pulled in quickly runs up into the 50 to 100 range and I don’t believe that’s uncommon.

I’m a believer in repeatable builds to keep deployment sane and reduce the “but it works on my machine” factor. So each Django project has a requirements.txt that specifies exact version numbers for every python library that the project uses. Running pip install -r requirements.txt should produce a very predictable environment (modulo entirely different OSes, etc.).

Working on multiple projects (between work and personal projects, I help maintain at least 50 different Django projects), it’s not wise to have those libraries all installed globally. The Python community’s standard solution is virtualenv, which does a nice job of letting you keep everything separate. But now you’ve got a bunch of virtualenvs that you need to manage.

I have a seperate little rant here about how the approach of “activating” virtualenvs in a particular shell environment (even via virtualenvwrapper or similar tools) is an antipattern and should be avoided. I’ll spare you most of that except to say that what I recommend instead is sticking with a standard location for your project’s virtualenv and then just calling the python, pip, etc commands in the virtualenv via their path. Eg, on my projects, I always do

$ virtualenv ve

at the top level of the project. Then I can just do:

$ ./ve/bin/python ...

and know that I’m using the correct virtualenv for the project without thinking about whether I’ve activated it yet in this terminal (I’m a person who tends to have lots and lots of terminals open at a given time and often run commands from an ephemeral shell within emacs). For Django, where most of what you do is done via manage.py commands, I actually just change the shebang line in manage.py to #!ve/bin/python so I can always just run ./manage.py do_whatever.

Let’s get back to Make though. If my project has a virtualenv that was created by running something along the lines of:

$ virtualenv ve

$ ./ve/bin/pip install -r requirements.txt

And requirements.txt gets updated, the virtualenv needs to be updated as well. This is starting to get into Make’s territory. Personally, I’ve encountered enough issues with pip messing up in-place updates on libraries that I prefer to just nuke the whole virtualenv from orbit and do a clean install anytime something changes.

Let’s add a rule to a Makefile that looks like this:

ve/bin/python: requirements.txt

rm -rf ve

virtualenv ve

./ve/bin/pip install -r requirements.txt

If that’s the only rule in the Makefile (or just the first), typing

$ make

At the terminal will ensure that the virtualenv is all set up and ready to go. On a fresh checkout, ve/bin/python won’t exist, so it will run the three commands, setting everything up. If it’s run at any point after that, it will see that ve/bin/python is more recently updated than requirements.txt and nothing needs to be done. If requirements.txt changes at some point, running make will trigger a wipe and reinstall of the virtualenv.

Already, that’s actually getting useful. It’s better when you consider that in a real project, the commands involved quickly get more complicated with specifying a custom --index-url, setting up some things so pip installs from wheels, and I even like to specify exact versions of virtualenv and setuptools in projects I don’t have to think about what might happen on systems with different versions of those installed. The actual commands are complicated enough that I’m quite happy to have them written down in the Makefile so I only need to remember how to type make.

It all gets even better again when you realize that you can use ve/bin/python as a prerequisite for other rules.

Remember that if a target rule doesn’t match a filename, Make will just always run the commands associated with it. Eg, on a Django project, to run a development server, I might run:

$ ./manage.py runserver 0.0.0.0:8001

Instead, I can add a stanza like this to my Makefile:

runserver: ve/bin/python

./manage.py runserver 0.0.0.0:8001

Then I can just type make runserver and it will run that command for me. Even better, since ve/bin/python is a prerequisite for runserver, if the virtualenv for the project hasn’t been created yet (eg, if I just cloned the repo and forgot that I need to install libraries and stuff), it just does that automatically. And if I’ve done a git pull that updated my requirements.txt without noticing, it will automatically update my virtualenv for me. This sort of thing has been incredibly useful when working with designers who don’t necessarily know the ins and outs of pip and virtualenv or want to pay close attention to the requirements.txt file. They just know they can run make runserver and it works (though sometimes it spends a few minutes downloading and installing stuff first).

I typically have a bunch more rules for common tasks set up in a similar fashion:

check: ve/bin/python

./manage.py check

migrate: check

./manage.py migrate

flake8: ve/bin/python

./ve/bin/flake8 project

test: check flake8

./manage.py test

That demonstrates a bit of how rules chain together. If I run make test, check and flake8 are both prerequisites, so they each get run first. They, in turn, both depend on the virtualenv being created so that will happen before anything.

Perhaps you’ve noticed that there’s also a little bug in the ve/bin/python stanza up above. ve/bin/python is created by the virtualenv ve step, but it’s used as the target for the stanza. If the pip install step fails though (because of a temporary issue with PyPI or just a typo in requirements.txt or something), it will still have “succeeded” in that ve/bin/python has a fresher timestamp than requirements.txt. So the virtualenv won’t really have the complete set of libraries installed there but subsequent runs of Make will consider everything fine (based on timestamp comparisons) and not do anything. Other rules that depend on the virtualenv being set up are going to have problems when they run.

I get around that by introducing the concept of a sentinal file. So my stanza actually becomes something like:

ve/sentinal: requirements.txt

rm -rf ve

virtualenv ve

./ve/bin/pip install -r requirements.txt

touch ve/sentinal

Ie, now there’s a zero byte file named ve/sentinal that exists just to signal to Make that the rule was completed successfully. If the pip install step fails for some reason, it never gets created and Make won’t try to keep going until that gets fixed.

My actual Makefile setup on real projects has grown more flexible and more complex, but if you’ve followed most of what’s here, it should be relatively straightforward. In particular, I’ve taken to splitting functionality out into individual, reusable .mk files that are heavily parameterized with variables, which then just get includeed into the main Makefile where the project specific variables are set.

Eg, here is a typical one. It sets a few variables specific to the project, then just does include *.mk.

The actual Django related rules are in django.mk, which is a file that I use across all my django projects and should look similar to what’s been covered here (just with a lot more rules and variables). Other .mk files in the project handle tasks for things like javascript (jshint and jscs checks, plus npm and webpack operations) or docker. They all take default values from config.mk and are set up so those can be overridden in the main Makefile or from the commandline. The rules in these files are a bit more sophisticated than the examples here, but not by much. [I should also point out here that I am by no means a Make expert, so you may not want to view my stuff as best practices; merely a glimpse of what’s possible.]

I typically arrange things so the default rule in the Makefile runs the full suite of tests and linters. Running the tests after every change is the most common task for me, so having that available with a plain make command is nice. As an emacs user, it’s even more convenient since emacs’ default setting for the compile command is to just run make. So it’s always a quick keyboard shortcut away. (I use projectile, which is smart enough to run the compile command in the project root).

Make was originally created in 1976, but I hope you can see that it remains relevant and useful forty years later.